
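Introduction to Volley

Volley is an open-source networking library from Google. In essence it wraps Android's HttpURLConnection or HttpClient, runs requests on several worker threads, and adds caching. That makes it a good fit for highly concurrent network requests, but a poor fit for large file downloads. Volley's official repository is hosted on GitHub.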

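Usage example

mRequestQueue = Volley.newRequestQueue(this);

A simple example: first obtain a RequestQueue. You don't need a new one for every request; usually you create it once and keep it as an app-wide singleton or as a field of the Activity. Then create a Request object and add it to the RequestQueue. That's all there is to it.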

Overview

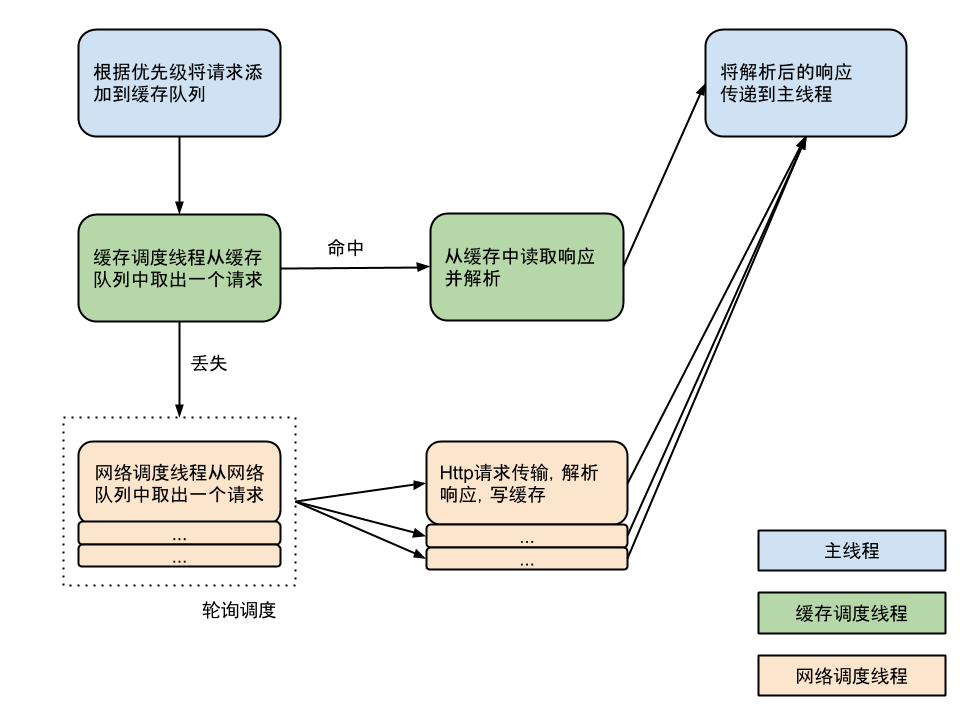

Here is a diagram of Volley's workflow to start with (borrowed, since I didn't feel like drawing my own).


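Next, the more important classes in Volley:

| Class | Kind | Role |
|---|---|---|
| Volley | Class | Public entry point; mainly used to build a RequestQueue |
| Request | Abstract class | Base class for all network requests; subclasses include StringRequest, JsonRequest and ImageRequest |
| RequestQueue | Class | Queue that holds requests; it owns a CacheDispatcher, several NetworkDispatchers and a ResponseDelivery |
| CacheDispatcher | Class | Thread that processes requests from the cache queue |
| NetworkDispatcher | Class | Thread that processes requests from the network queue |
| Cache | Interface | Main implementation is DiskBasedCache, which caches to disk |
| HttpStack | Interface | Implemented by HurlStack and HttpClientStack, which handle HTTP requests with HttpURLConnection and HttpClient respectively |
| Network | Interface | Implemented by BasicNetwork, which calls the HttpStack and converts the result into a NetworkResponse that ResponseDelivery can handle |
| Response | Class | Wraps a parsed result so it can be delivered |
| ResponseDelivery | Interface | Implemented by ExecutorDelivery; delivers results and calls back on the main thread |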

Creating the RequestQueue

Executing a network request takes three steps: create the RequestQueue, create the Request, and add the Request to the RequestQueue. We start with the first step.

mRequestQueue = Volley.newRequestQueue(this);

Step into it:

public static RequestQueue newRequestQueue(Context context) {

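Here an HttpStack is created according to the SDK version. It is then wrapped in a BasicNetwork to build a Network object, which is what handles network requests.

As you can see, the SDK version is checked when the HttpStack is created. If the SDK level is 9 or higher, Volley creates a HurlStack, which is based on HttpURLConnection; otherwise it creates an HttpClientStack, which is based on HttpClient. Before Android 2.3 (SDK level 9), HttpURLConnection had some bugs, so HttpClient was the better choice there. From Android 2.3 onward, HttpURLConnection is the best choice. Since practically all phones today run 4.0 or later, you can read this if/else as always taking the HurlStack branch. Let's keep going.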
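The version check described above boils down to a single comparison. Here is a minimal sketch in plain Java; `StackChooserSketch` and the string return values are hypothetical stand-ins for the real stack objects, not Volley's code.

```java
// Simplified model of the SDK-level check; not Volley's actual source.
final class StackChooserSketch {
    // Android 2.3 (Gingerbread) corresponds to SDK level 9.
    static final int GINGERBREAD = 9;

    // Returns the name of the stack the article says is picked for this SDK level.
    static String chooseStack(int sdkInt) {
        return sdkInt >= GINGERBREAD ? "HurlStack" : "HttpClientStack";
    }
}
```

Calling `StackChooserSketch.chooseStack(8)` selects the HttpClient-based stack, while any level from 9 upward selects the HttpURLConnection-based one.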

A default cache directory is created first; then a RequestQueue is constructed with the Network object built above, and start() is called on it.

First, the RequestQueue constructor:

public RequestQueue(Cache cache, Network network) {

To make it easier to follow, I simplified the code to this:

public RequestQueue(Cache cache, Network network) {

This creates a NetworkDispatcher array of length 4, and then an ExecutorDelivery object, mDelivery.

Let's focus on what mDelivery does by looking at its relevant methods.

public ExecutorDelivery(final Handler handler) {

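When mDelivery.postResponse() or postError() is called, it calls mResponsePoster.execute(), which in turn calls handler.post(command). The handler passed in by the RequestQueue constructor is bound to the main thread, so the response is called back on the main thread. In short, mDelivery exists to hand the results of network requests from worker threads over to the main thread.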
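The hand-off described above can be modeled without Android. In this sketch a single-thread executor named `fake-main` stands in for the Handler bound to the main looper; `DeliverySketch` and `demo()` are hypothetical names, and the real ExecutorDelivery does more (for example, it checks for cancellation before invoking the callback).

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

// Plain-Java model of the delivery idea; not Volley's source.
final class DeliverySketch {
    private final Executor responsePoster;

    DeliverySketch(Executor mainThreadStandIn) {
        // Every posted callback is forwarded to the "main thread" executor.
        this.responsePoster = command -> mainThreadStandIn.execute(command);
    }

    void postResponse(Runnable callback) {
        responsePoster.execute(callback);
    }

    // Posts one callback from a worker thread and reports which thread ran it.
    static String demo() {
        ExecutorService fakeMain = Executors.newSingleThreadExecutor(
                r -> new Thread(r, "fake-main"));
        DeliverySketch delivery = new DeliverySketch(fakeMain);
        AtomicReference<String> ranOn = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);
        Thread worker = new Thread(() -> delivery.postResponse(() -> {
            ranOn.set(Thread.currentThread().getName());
            done.countDown();
        }), "worker");
        worker.start();
        try {
            if (!done.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("callback did not run");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            fakeMain.shutdown();
        }
        return ranOn.get();
    }
}
```

Although `postResponse` is called on the `worker` thread, the callback itself runs on `fake-main`, which is the same relationship a dispatcher thread has with the Android main thread.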

Back to RequestQueue: here is the source of queue.start().

public void start() {

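It first makes sure all dispatcher threads are stopped, then creates a cache dispatcher thread and starts it. After that it creates four network dispatcher threads and starts each of them.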
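As a rough illustration of that sequence, the sketch below stops any running threads, then starts one cache thread and four network threads. `QueueStartSketch` is a hypothetical model: its threads only sleep, where the real dispatchers block on their queues.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of the stop-then-start sequence; not Volley's source.
final class QueueStartSketch {
    static final int NETWORK_THREAD_COUNT = 4; // the pool size named in the article

    private final List<Thread> dispatchers = new ArrayList<>();

    // Stops any running dispatchers, then starts one cache and four network threads.
    void start() {
        stop();
        dispatchers.add(newDispatcher("cache-dispatcher"));
        for (int i = 0; i < NETWORK_THREAD_COUNT; i++) {
            dispatchers.add(newDispatcher("network-dispatcher-" + i));
        }
        for (Thread t : dispatchers) {
            t.start();
        }
    }

    void stop() {
        for (Thread t : dispatchers) {
            t.interrupt();
        }
        dispatchers.clear();
    }

    int runningDispatchers() {
        return dispatchers.size();
    }

    private static Thread newDispatcher(String name) {
        Thread t = new Thread(() -> {
            try {
                Thread.sleep(Long.MAX_VALUE); // stand-in for blocking on a queue
            } catch (InterruptedException e) {
                // interrupted by stop(): let the thread end
            }
        }, name);
        t.setDaemon(true);
        return t;
    }
}
```

Calling `start()` twice in a row still leaves exactly five threads, because the first thing `start()` does is stop the previous set.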

Of these threads, let's pick CacheDispatcher and take a quick look at its run() method.


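It contains an infinite loop, so this thread keeps running and does not exit on its own.

As a small spoiler: the code in processRequest() blocks at the point where it takes a request from the cache queue (if the cache queue is empty) until a request is available (that is, a new request has been added to the cache queue), and then goes on to process that request.

We will analyze this in detail later. For now, the idea to hold on to is that once these threads are started, they keep taking requests off the queues and processing them.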
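The loop-and-block behavior can be sketched with a plain `BlockingQueue`. `DispatcherLoopSketch` is a hypothetical model that uses strings as requests; the `quit()` method is included as an assumption about how such a loop is normally shut down.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Simplified dispatcher: loops forever and blocks in take() while the queue is empty.
final class DispatcherLoopSketch extends Thread {
    private final BlockingQueue<String> queue;
    private final List<String> handled = new CopyOnWriteArrayList<>();
    private final CountDownLatch firstHandled = new CountDownLatch(1);
    private volatile boolean quit = false;

    DispatcherLoopSketch(BlockingQueue<String> queue) {
        this.queue = queue;
        setDaemon(true);
    }

    @Override
    public void run() {
        while (true) {
            try {
                String request = queue.take(); // blocks until a request arrives
                handled.add(request);          // stand-in for processing it
                firstHandled.countDown();
            } catch (InterruptedException e) {
                if (quit) {
                    return; // only an explicit quit() ends the loop
                }
            }
        }
    }

    void quit() {
        quit = true;
        interrupt();
    }

    // Adds one request, waits until the loop has handled it, then quits.
    static List<String> demo() {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        DispatcherLoopSketch dispatcher = new DispatcherLoopSketch(queue);
        dispatcher.start();     // the thread blocks in take() while the queue is empty
        queue.add("request-1"); // adding a request wakes it up
        try {
            dispatcher.firstHandled.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        dispatcher.quit();
        return dispatcher.handled;
    }
}
```

The thread consumes no CPU while waiting, which is why keeping several dispatcher threads alive for the lifetime of the queue is cheap.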

RequestQueue.add(Request)

Now let's look at how a request is added to the RequestQueue.


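It breaks down into the following steps:

- Associate the request with the queue that handles it, so that when the request finishes it can notify that queue.

- Add the request to mCurrentRequests, a HashSet (used so that the same request is not tracked twice). mCurrentRequests holds every request currently being handled by the RequestQueue.

- If the request must not be cached, put it on the network queue.

- If the request may be cached, put it on the cache queue.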
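The four steps above can be sketched as follows. `AddRoutingSketch` and its nested `Request` class are hypothetical stand-ins; Volley's real `Request<T>` carries far more state.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Simplified model of the routing done when a request is added; not Volley's source.
final class AddRoutingSketch {
    static final class Request {
        final String url;
        final boolean shouldCache;
        AddRoutingSketch owner; // the queue to notify when the request finishes

        Request(String url, boolean shouldCache) {
            this.url = url;
            this.shouldCache = shouldCache;
        }
    }

    final Set<Request> currentRequests = new HashSet<>();
    final BlockingQueue<Request> cacheQueue = new LinkedBlockingQueue<>();
    final BlockingQueue<Request> networkQueue = new LinkedBlockingQueue<>();

    Request add(Request request) {
        request.owner = this;             // step 1: tie the request to this queue
        synchronized (currentRequests) {
            currentRequests.add(request); // step 2: track it as in flight
        }
        if (!request.shouldCache) {
            networkQueue.add(request);    // step 3: uncacheable, go straight to the network
            return request;
        }
        cacheQueue.add(request);          // step 4: cacheable, try the cache first
        return request;
    }
}
```

Note that a cacheable request is never put on the network queue at this point; it only reaches the network later if the cache cannot answer it.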

So the question is: what happens to a request once it is in a queue? Where does it go, and when is it taken out? The answer lies in CacheDispatcher and NetworkDispatcher.

CacheDispatcher

First, the CacheDispatcher constructor:

public CacheDispatcher(

The mCacheDispatcher in RequestQueue is created when start() is called. As the constructor shows, mCacheDispatcher receives the RequestQueue's mCacheQueue, mNetworkQueue, mCache and mDelivery (the ExecutorDelivery from earlier).

Now the run() method:


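Let's look closely at processRequest(). It first takes a request from the cache queue; if the queue is empty, it blocks on the mCacheQueue.take() line and goes no further. This is where the requests added by RequestQueue.add(Request) are taken out. If the request has been canceled by this point, it is finished immediately.

Otherwise the dispatcher looks up the Cache.Entry for the request's URL. If there is no entry, the request is added to the network queue. If there is an entry but it has expired, the request is also added to the network queue. If the entry has not expired, it is parsed into a response object. The dispatcher then checks whether the entry needs refreshing: if not, it uses mDelivery (the ExecutorDelivery from earlier) to call back with the result on the main thread; if so, it delivers the response to the main thread and also adds the request to the network queue.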
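The branches in that walkthrough form a small decision table. The sketch below returns the actions taken for one request; the `Entry` class, its two timestamps and the action names are hypothetical stand-ins for Volley's Cache.Entry and queues, and the current time is passed in so the result is deterministic.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified decision table for one request taken from the cache queue.
final class CacheDecisionSketch {
    static final class Entry {
        final long ttl;     // hard expiry time, in milliseconds
        final long softTtl; // time after which a refresh is wanted, in milliseconds

        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }

        boolean isExpired(long now) { return ttl < now; }
        boolean refreshNeeded(long now) { return softTtl < now; }
    }

    // Returns the actions taken, in order.
    static List<String> process(boolean canceled, Entry entry, long now) {
        List<String> actions = new ArrayList<>();
        if (canceled) {
            actions.add("finish");          // canceled before processing
            return actions;
        }
        if (entry == null || entry.isExpired(now)) {
            actions.add("network");         // cache miss or expired entry
            return actions;
        }
        actions.add("deliver-cached");      // fresh enough to show right away
        if (entry.refreshNeeded(now)) {
            actions.add("network");         // also refresh in the background
        }
        return actions;
    }
}
```

The last branch is the interesting one: a soft-expired entry produces two actions, so the caller sees cached data immediately and fresh data once the network request completes.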

NetworkDispatcher

Next, NetworkDispatcher:

public NetworkDispatcher(BlockingQueue<Request<?>> queue,

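It is somewhat similar to CacheDispatcher. Reading through the code: it first takes a request from the network queue. If the queue is empty it blocks, but as soon as a request is added it can be taken out. If the request has been canceled by then, it finishes immediately. Otherwise the network request is performed, which may block again. Once the network response arrives, if the server returned 304 and a response has already been delivered for this request, the request is finished. Otherwise the network response is parsed according to the request. If the request allows caching, the parsed response is saved to disk, and finally the response is delivered to the main thread.

Now let's see what the actual network request looks like, in mNetwork.performRequest(request).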


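The code is long, so in brief: it uses the BaseHttpStack created at the very beginning to execute the network request. To see how the HttpURLConnection request itself is made, step into mBaseHttpStack.executeRequest(request, additionalRequestHeaders); I won't go into it here.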
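To recap the NetworkDispatcher flow from this section, the sketch below lists the steps taken for one request. `NetworkFlowSketch` is a hypothetical model: the status code and flags are passed in directly as assumptions, where the real dispatcher gets them from the request and the network response.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of what a network dispatcher does with one request.
final class NetworkFlowSketch {
    // statusCode is the outcome of the (simulated) network call.
    static List<String> process(boolean canceled, int statusCode,
                                boolean alreadyDelivered, boolean shouldCache) {
        List<String> steps = new ArrayList<>();
        if (canceled) {
            steps.add("finish");        // canceled while waiting in the queue
            return steps;
        }
        steps.add("perform-request");   // may block on the network
        if (statusCode == 304 && alreadyDelivered) {
            steps.add("finish");        // cached copy was already delivered
            return steps;
        }
        steps.add("parse");             // turn raw bytes into a typed response
        if (shouldCache) {
            steps.add("write-cache");   // store the parsed result's cache entry
        }
        steps.add("deliver");           // hand over to the main thread
        return steps;
    }
}
```

For an ordinary cacheable request that returns 200, the result is perform-request, parse, write-cache, deliver, in that order.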

Finishing and canceling requests

First, the Request.finish(String) method:

void finish(final String tag) {

Now look at the RequestQueue.finish(Request<T>) method:

<T> void finish(Request<T> request) {

This first removes the request from mCurrentRequests and then calls back every RequestFinishedListener. The mFinishedListeners list is filled through an optional method on RequestQueue, which I generally don't use:

public <T> void addRequestFinishedListener(RequestFinishedListener<T> listener) {
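The finish path described above can be modeled in a few lines. `FinishSketch` is a hypothetical stand-in that uses strings as requests and a non-generic listener interface to keep the example short.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Simplified model of finishing a request and notifying listeners.
final class FinishSketch {
    interface RequestFinishedListener {
        void onRequestFinished(String request);
    }

    private final Set<String> currentRequests = new HashSet<>();
    private final List<RequestFinishedListener> finishedListeners = new ArrayList<>();

    void add(String request) {
        synchronized (currentRequests) {
            currentRequests.add(request);
        }
    }

    // Optional: callers that never register a listener are simply not notified.
    void addRequestFinishedListener(RequestFinishedListener listener) {
        synchronized (finishedListeners) {
            finishedListeners.add(listener);
        }
    }

    void finish(String request) {
        synchronized (currentRequests) {
            currentRequests.remove(request); // no longer in flight
        }
        synchronized (finishedListeners) {
            for (RequestFinishedListener listener : finishedListeners) {
                listener.onRequestFinished(request);
            }
        }
    }

    int inFlight() {
        synchronized (currentRequests) {
            return currentRequests.size();
        }
    }
}
```

Removing the request before notifying listeners means a listener that inspects the in-flight set will not see the request that just finished.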

Now the RequestQueue.cancelAll(Object) method:

public void cancelAll(final Object tag) {

The filter.apply(request) call inside cancelAll(RequestFilter filter) ends up executing the return request.getTag() == tag; statement from cancelAll(final Object tag).
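The relationship between the two overloads can be sketched like this. `CancelSketch` and its nested types are hypothetical stand-ins; only the `==` comparison on the tag is taken from the statement quoted above.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Simplified model of tag-based cancellation through a filter.
final class CancelSketch {
    static final class Request {
        final Object tag;
        boolean canceled;

        Request(Object tag) {
            this.tag = tag;
        }

        void cancel() {
            canceled = true;
        }
    }

    interface RequestFilter {
        boolean apply(Request request);
    }

    final Set<Request> currentRequests = new LinkedHashSet<>();

    // Cancels every in-flight request the filter accepts.
    void cancelAll(RequestFilter filter) {
        synchronized (currentRequests) {
            for (Request request : currentRequests) {
                if (filter.apply(request)) {
                    request.cancel();
                }
            }
        }
    }

    // Builds a filter that matches by tag identity, then delegates to the overload above.
    void cancelAll(final Object tag) {
        if (tag == null) {
            throw new IllegalArgumentException("tag must not be null");
        }
        RequestFilter byTag = request -> request.tag == tag;
        cancelAll(byTag);
    }
}
```

Because the comparison uses `==` instead of `equals`, a tag only matches the very same object, so it is safest to tag requests with a shared constant or with the Activity instance itself.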

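Conclusion

That more or less wraps up the analysis of Volley's source; I'll leave the finer details aside for now. The main point of reading source code is to absorb the framework's ideas and design. We advocate not reinventing the wheel, but that doesn't mean we needn't know how the wheel is made. And sometimes the best way to learn is to go through the process of making the wheel yourself (that is, reinventing it) 😂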

github repo: https://github.com/mundane799699/AndroidProjects/tree/master/VolleyDemo